OpenTSDB was built at StumbleUpon, a company highly experienced with HBase. It’s a great example of how to build an application with HBase as its backing store. OpenTSDB is open source, so you have complete access to the code. This article, based on chapter 2 of HBase in Action, explains how to design an HBase application.

This article is based on HBase in Action, to be published in Fall 2012. It is being reproduced here by permission from Manning Publications. Manning early access books and ebooks are sold exclusively through Manning. Visit the book’s page for more information.

Authors: Nick Dimiduk and Amandeep Khurana

While OpenTSDB could have been built on a relational database, it is an HBase application. It is built by individuals who think about scalable data systems in the same way HBase does. That’s not to say this approach is particularly mysterious – simply, it’s different from the way we typically think about relational data systems. This can be seen in both the schema design and application architecture of OpenTSDB. This article begins with a study of the OpenTSDB schema. For many of you, this will be your first glimpse of a nontrivial HBase schema. We hope this working example will provide useful insight into taking advantage of the HBase data model. After that, you’ll see how to use the key features of HBase as a model for your own applications.

Schema design

OpenTSDB depends on HBase for two distinct functions. The tsdb table provides storage and query support over time series data. The tsdb-uid table maintains an index of globally unique values for use as metric tags. We’ll first look at the script used to generate these two tables and then dive deeper into the usage and design of each one. First, the script:

Listing 1 Scripting the HBase shell to create the tables used by OpenTSDB

#!/bin/sh

# Small script to setup the hbase table used by OpenTSDB.

test -n "$HBASE_HOME" || { #A

echo >&2 'The environment variable HBASE_HOME must be set'

exit 1

}

test -d "$HBASE_HOME" || {

echo >&2 "No such directory: HBASE_HOME=$HBASE_HOME"

exit 1

}

TSDB_TABLE=${TSDB_TABLE-'tsdb'}

UID_TABLE=${UID_TABLE-'tsdb-uid'}

COMPRESSION=${COMPRESSION-'LZO'}

exec "$HBASE_HOME/bin/hbase" shell <<EOF

create '$UID_TABLE', #B

{NAME => 'id', COMPRESSION => '$COMPRESSION'}, #B

{NAME => 'name', COMPRESSION => '$COMPRESSION'} #B

create '$TSDB_TABLE', #C

{NAME => 't', COMPRESSION => '$COMPRESSION'} #C

EOF

#A From environment, not parameter

#B Makes the 'tsdb-uid' table with column families 'id' and 'name'

#C Makes the 'tsdb' table with the 't' column family

The first thing to notice is how similar the script is to any script containing Data Definition Language (DDL) code for a relational database. The term DDL is often used to distinguish code that provides schema definition and modification from code performing data updates. A relational database uses SQL for schema modifications; HBase depends on the API. The most convenient way to access the API for this purpose is through the HBase shell. It provides a set of primitive commands wrapping the API, including the table manipulation class hierarchy starting at HBaseAdmin.

Declaring the schema

The tsdb-uid table contains two column families, named id and name. The tsdb table also specifies a column family, named simply t. Column families will be described later in more detail. For now, just think of them as namespaces for columns. Notice the lengths of the column family names are all pretty short. This is due to an implementation detail of the on-disk storage format of the current version of HBase – in general, shorter is better. Finally, notice the lack of a higher-level abstraction. Unlike most popular relational databases, there is no concept of table groups. All table names in HBase exist in a common namespace managed by the HBase master.

That’s it. No, really, that’s it. This is why HBase is often referred to as a schemaless database. Unlike SQL, there are no column definitions or types. All data stored in HBase goes in and comes out as an array of bytes. Making sure the value read out of the database is interpreted correctly is left up to the consuming application. HBase has no type system (with one exception: its atomic increment command assumes the cell value is a whole number), and thus the client libraries aren’t littered with methods dedicated to translation between language primitive types and database primitive types. Rows of data in HBase are identified by their rowkey, an assumed field on all tables. Just as HBase is not in the business of managing data types, it’s also not in the business of managing relationships. There are no predicates describing cardinalities, column relationships, or other constraints. HBase is in the business of providing consistent, scalable, low-latency (fast!), random access to data.
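To make the "bytes in, bytes out" point concrete, here is a minimal sketch in Python. It does not talk to HBase at all; it only shows that the same eight bytes mean different things depending on how the reading application decodes them. The big-endian encoding is an assumption of this sketch, not something HBase enforces.

```python
import struct

# HBase would store and return only the raw bytes; choosing an 8-byte
# big-endian signed integer is this sketch's decision, not HBase's.
raw = struct.pack(">q", 476)

# A reader that knows the value was written as a long gets it back intact.
as_long = struct.unpack(">q", raw)[0]

# A reader that wrongly assumes a double gets a meaningless number.
as_double = struct.unpack(">d", raw)[0]

print(len(raw), as_long)
print(as_double == 476.0)
```

The database cannot catch the second mistake, so the writer and every reader must agree on the encoding out of band.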

Now that you’ve seen how these two tables are created in HBase, let’s explore how these tables are actually used.

The tsdb-uid table

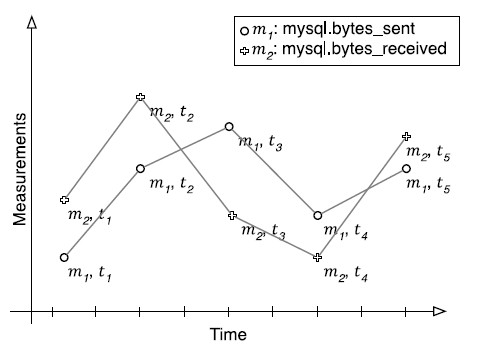

While this table is ancillary to the tsdb table, we explore it first because understanding why it exists will provide insight into the overall design. The OpenTSDB schema design is optimized for the management of time series measurements and their associated tags. By “tags,” we mean anything used to further identify a measurement recorded in the system. In OpenTSDB this includes the observed metric, the metadata name, and the metadata value. It uses a single class, UniqueId, to manage all of these different tags, hence “uid” in the table name. Each metric in figure 1, mysql.bytes_sent and mysql.bytes_received, receives its own UID in this table.

Figure 1 Time series, a sequence of time-ordered points. Two time series on the same scale rendered on the same graph. The timestamp is commonly used as an X-axis value when representing a time series visually.

The tsdb-uid table is for UID management. UIDs have a fixed 3-byte width and are used in a foreign-key-like relationship from the tsdb table; more on that later. Registering a new UID results in two rows in this table: one maps the tag name to the UID, and the other maps the UID to the name. For instance, registering the mysql.bytes_sent metric generates a new UID used as the rowkey in the UID-to-name row. The name column family for that row stores the tag name. The column qualifier is used as a kind of namespace for UIDs, distinguishing this UID as a metric (as opposed to a metadata tag name or value). The name-to-UID row uses the name as the rowkey and stores the UID in the id column family, again qualified by the tag type. Listing 2 shows what this table looks like with a couple of metrics registered.

Listing 2 Registering metrics in the tsdb-uid table

hbase@ubuntu:~$ tsdb mkmetric mysql.bytes_received mysql.bytes_sent

metrics mysql.bytes_received: [0, 0, 1]

metrics mysql.bytes_sent: [0, 0, 2]

hbase@ubuntu:~$ hbase shell

> scan 'tsdb-uid', {STARTROW => "\0\0\1"}

ROW COLUMN+CELL

\x00\x00\x01 column=name:metrics, value=mysql.bytes_received

\x00\x00\x02 column=name:metrics, value=mysql.bytes_sent

mysql.bytes_received column=id:metrics, value=\x00\x00\x01

mysql.bytes_sent column=id:metrics, value=\x00\x00\x02

4 row(s) in 0.0460 seconds

>
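The two-rows-per-UID layout in listing 2 can be modeled with a plain dictionary. This is only a sketch of the table's shape: the `register` function and the in-memory counter are hypothetical stand-ins, and the way OpenTSDB actually allocates UIDs is not shown here.

```python
# In-memory stand-in for the tsdb-uid table:
# {rowkey: {"family:qualifier": value}}
table = {}
next_uid = {}  # hypothetical per-kind counter; not how OpenTSDB allocates UIDs

def register(kind, name):
    """Assign the next UID for `kind` and write both mapping rows."""
    next_uid[kind] = next_uid.get(kind, 0) + 1
    uid = next_uid[kind].to_bytes(3, "big")  # fixed 3-byte width
    # UID-to-name row: rowkey is the UID, value lives in the 'name' family.
    table.setdefault(uid, {})["name:" + kind] = name.encode()
    # name-to-UID row: rowkey is the name, value lives in the 'id' family.
    table.setdefault(name.encode(), {})["id:" + kind] = uid
    return uid

register("metrics", "mysql.bytes_received")
register("metrics", "mysql.bytes_sent")

# Iterating in sorted rowkey order mimics the order of an HBase scan.
for rowkey in sorted(table):
    print(rowkey, table[rowkey])
```

Because the qualifier carries the tag type, a metric and a tag name could share the same 3-byte UID without colliding.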

The name-to-UID rows enable support for autocomplete of tag names. OpenTSDB’s UI allows a user to start typing a UID name and it will populate a list of suggestions with UIDs from this table. It does this using an HBase row scan bounded by rowkey range. Later, you’ll see exactly how that code works.
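A scan bounded by rowkey range is easy to picture with a sorted list. The sketch below is not OpenTSDB's code; it assumes the stop key is formed by incrementing the last byte of the typed prefix, and the metric names other than the two from listing 2 are invented for illustration.

```python
from bisect import bisect_left

# Sorted rowkeys stand in for HBase's ordered storage of name-to-UID rows.
# mysql.connections and sys.cpu.user are hypothetical entries.
rowkeys = sorted([
    b"mysql.bytes_received",
    b"mysql.bytes_sent",
    b"mysql.connections",
    b"sys.cpu.user",
])

def suggest(prefix, limit=10):
    """Return up to `limit` rowkeys in the range [prefix, stop)."""
    # Assumes a non-empty prefix whose last byte is below 0xFF.
    stop = prefix[:-1] + bytes([prefix[-1] + 1])
    lo = bisect_left(rowkeys, prefix)
    hi = bisect_left(rowkeys, stop)
    return rowkeys[lo:hi][:limit]

print(suggest(b"mysql.b"))
```

Because the rows are sorted, the lookup touches only the slice of keys that share the prefix rather than the whole table.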

The tsdb table

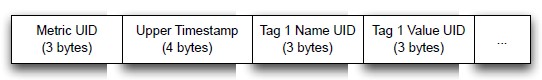

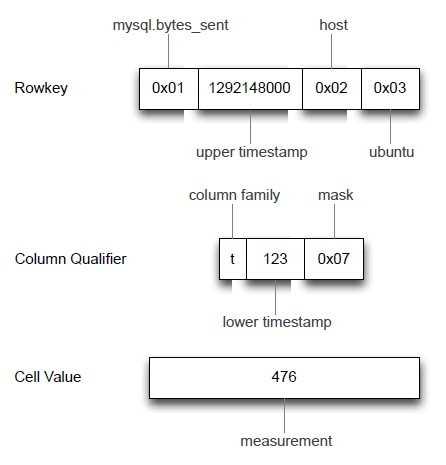

This is the heart of the time series database: the table that stores time series of measurements and metadata. This table is designed to support queries of data filtered by date range and tag. This is accomplished through careful design of the rowkey, because the rowkey is the only field HBase indexes, and it is always indexed. HBase itself provides no secondary indexes over arbitrary columns. Figure 2 illustrates the rowkey for this table. Take a look and then we’ll walk through it.

Figure 2 The layout of an OpenTSDB rowkey consists of 3 bytes for the metric id, 4 bytes for the timestamp, and 3 bytes each for the tag name id and tag value id, repeated.

Remember the UIDs generated by tag registration in the tsdb-uid table? They are used here in the rowkey of this table. OpenTSDB is optimized for metric-centric queries, so the metric UID comes first. HBase stores rows ordered by rowkey, so the entire history for a single metric is stored as contiguous rows. Within a metric, rows are ordered by timestamp. The timestamp in the rowkey is rounded down to the hour, so a single row stores a bucket of measurements for that hour. Finally, the tag name and value UIDs come last in the rowkey. Storing all these attributes in the rowkey allows them to be considered while filtering search results. You’ll see exactly how that’s done shortly.
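The rowkey layout can be sketched in a few lines of Python. The function name `build_rowkey` is hypothetical, and sorting the tag pairs before appending them is an assumption of this sketch; the byte widths come from figure 2.

```python
import struct

def build_rowkey(metric_uid, timestamp, tags):
    """Build a rowkey: 3-byte metric UID, 4-byte hour, then 3+3 bytes per tag.

    metric_uid: 3 bytes; timestamp: epoch seconds;
    tags: list of (name_uid, value_uid) pairs, 3 bytes each.
    """
    base_time = timestamp - (timestamp % 3600)        # round down to the hour
    key = metric_uid + struct.pack(">I", base_time)   # 4-byte big-endian
    for name_uid, value_uid in sorted(tags):          # ordering is assumed
        key += name_uid + value_uid
    return key

m1, m2 = b"\x00\x00\x01", b"\x00\x00\x02"
host = [(b"\x00\x00\x01", b"\x00\x00\x01")]
keys = [
    build_rowkey(m2, 7200, host),
    build_rowkey(m1, 7300, host),
    build_rowkey(m1, 10, host),
]

# Sorting the raw bytes groups rows by metric, then by hour.
for key in sorted(keys):
    print(key.hex())
```

Big-endian encoding matters here: it makes byte-wise ordering agree with numeric ordering of the timestamps, which is what keeps a metric's history contiguous and in time order.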

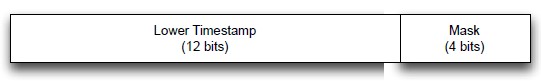

With this rowkey business sorted, let’s look at how measurements are stored. Notice the schema contains only a single column family, t. This table doesn’t use column families to organize data, but HBase requires a table to contain at least one. OpenTSDB uses a 2-byte column qualifier consisting of two parts: the seconds left over after rounding the timestamp down to the hour in the first 12 bits, and a 4-bit bitmask. The measurement value is stored in 8 bytes in the cell. Figure 3 illustrates the column qualifier.
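Packing 12 bits of seconds and 4 bits of flags into two bytes looks like this in Python. The constant names and the meaning of the low three flag bits (value length minus one) are assumptions of this sketch; the article only states that the first bit of the mask distinguishes integers from floats.

```python
FLAG_BITS = 4
FLAG_FLOAT = 0x8    # assumed: high bit of the 4-bit mask marks a float
LENGTH_MASK = 0x7   # assumed: low 3 bits hold (value length in bytes - 1)

def encode_qualifier(delta_seconds, is_float, value_length):
    """Pack the seconds-past-the-hour and the flags into 2 bytes."""
    if not 0 <= delta_seconds < 3600:
        raise ValueError("delta must fall within one hour")
    flags = (FLAG_FLOAT if is_float else 0) | ((value_length - 1) & LENGTH_MASK)
    return ((delta_seconds << FLAG_BITS) | flags).to_bytes(2, "big")

def decode_qualifier(qualifier):
    """Return (delta_seconds, is_float, value_length) from a 2-byte qualifier."""
    raw = int.from_bytes(qualifier, "big")
    return raw >> FLAG_BITS, bool(raw & FLAG_FLOAT), (raw & LENGTH_MASK) + 1

qualifier = encode_qualifier(1800, is_float=True, value_length=4)
print(qualifier.hex(), decode_qualifier(qualifier))
```

Twelve bits can represent values up to 4095, which comfortably covers the 3600 seconds in an hour.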

Figure 3 Column qualifiers store the final precision of the timestamp as well as a bitmask. The first bit in that mask indicates whether the value in the cell is an integer or float value.

How about an example? Let’s say you’re storing a mysql.bytes_sent metric measurement of 476 observed on Sun, 12 Dec 2010 10:02:03 GMT for the ubuntu host. We previously stored this metric as UID 0x1, the host tag name as 0x2 and the ubuntu tag value as 0x3. The timestamp is represented as a UNIX epoch value of 1292148123. This value is rounded down to the nearest hour and split into 1292148000 and 123. The rowkey and cell inserted into the tsdb table are shown in figure 4. Other measurements collected during the same hour for the same metric on the same host are all stored in other cells in this row.

Figure 4 An example rowkey, column qualifier, and cell value storing 476 mysql.bytes_sent at 1292148123 seconds in the tsdb table.
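The timestamp arithmetic in this example is easy to check for yourself. The short sketch below uses only the Python standard library and the date given in the text.

```python
from datetime import datetime, timezone

# Sun, 12 Dec 2010 10:02:03 GMT, as given in the example.
observed = datetime(2010, 12, 12, 10, 2, 3, tzinfo=timezone.utc)
timestamp = int(observed.timestamp())

# Split into the hour bucket (rowkey) and the offset within it (qualifier).
hours, delta = divmod(timestamp, 3600)
base_time = hours * 3600

print(timestamp, base_time, delta)
```

The base time goes into the rowkey and the remainder goes into the column qualifier, so the full timestamp can always be rebuilt by adding the two.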

It’s not often we see this kind of bitwise consideration in Java applications, is it? Much of this is done as either a performance or storage optimization. For instance, bucketing measurements hourly serves two purposes. It reduces the number of reads necessary to retrieve a time series from one per observation to one per hour of observations. It also removes duplicated storage of the higher-order timestamp bits. The first consideration reduces the load when querying data to be rendered in the graph and the second reduces the storage space consumed substantially.
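To see the effect of hourly bucketing on the number of rows, consider this small sketch. The 15-second collection interval and the values are hypothetical; only the bucketing rule comes from the schema described above.

```python
from collections import defaultdict

# One hypothetical observation every 15 seconds for three hours.
start = 1292148000
observations = [(start + i * 15, i) for i in range(3 * 3600 // 15)]

# base_time -> {delta: value}: one row per hour, one cell per observation.
rows = defaultdict(dict)
for ts, value in observations:
    rows[ts - ts % 3600][ts % 3600] = value

print(len(observations), "observations stored in", len(rows), "rows")
```

Each row carries the 4-byte hour once in its rowkey, while every cell needs only the 2-byte qualifier for its offset.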

Now that you’ve seen the design of an HBase schema, let’s look at how to build a reliable, scalable application using the same methods as those used for HBase.

Application architecture

As you study OpenTSDB, it’s useful to keep these HBase design fundamentals in mind:

* Linear scalability over multiple nodes, not a single monolithic server.

* Automatic partitioning of data and assignment of partitions.

* Strong consistency of data across partitions.

* High availability of data services.

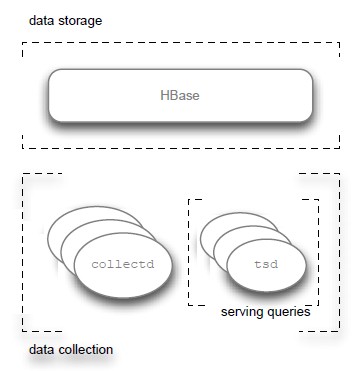

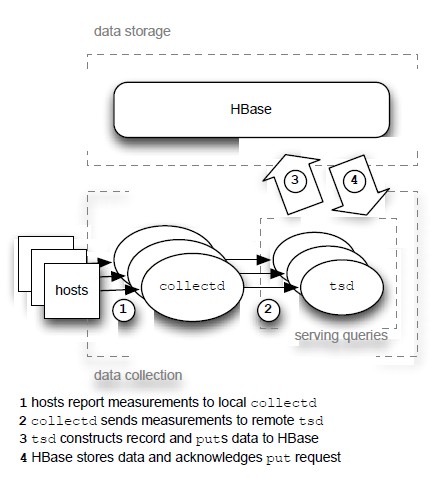

High availability and linear scalability are often primary motivators behind the decision to build on HBase. Very often, the application relying on HBase is required to meet these same requirements. Let’s look at how OpenTSDB achieves these goals through its architectural choices. Figure 5(a) provides a view of that architecture.

Figure 5(a) OpenTSDB architecture: separation of concerns. The three areas of concern are data collection, data storage, and serving queries.

Conceptually speaking, OpenTSDB has three responsibilities: data collection, data storage, and serving queries. As you might have guessed, data storage is provided by HBase, which already meets these requirements. How does OpenTSDB provide these features for the other responsibilities? Let’s look at them individually and then you’ll see how they’re tied back together through HBase.

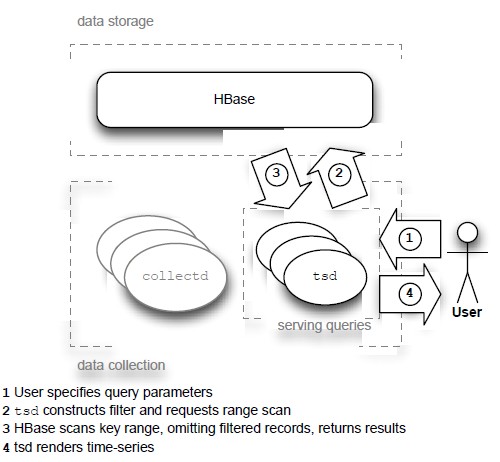

Serving queries

OpenTSDB ships a process called tsd for handling interactions with HBase. It exposes a simple HTTP interface for serving queries against HBase. The tsd HTTP API is documented at http://opentsdb.net/http-api.html. Requests can query for either metadata or an image representing the requested time series. All tsd processes are identical and stateless so high availability is achieved by running multiple tsd machines. Traffic to these machines can be routed using a load balancer, just like striping any other HTTP traffic. A client does not suffer from the outage of a single tsd machine because the request is simply routed to a different one.

Each query is self-contained and can be answered by a single tsd process independently. This allows OpenTSDB read support to achieve linear scalability. Support for an increasing number of client requests is handled by running more tsd machines. The self-contained nature of the OpenTSDB query has the added bonus of making the results served by tsd cacheable in the usual way. Figure 5(b) illustrates the OpenTSDB read path.

Figure 5(b) OpenTSDB read path. Requests are routed to an available tsd process that queries HBase and serves the results in the appropriate format.
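Why stateless, identical processes make high availability simple can be shown with a toy router. This is a sketch of the idea only, not of any real load balancer: the `Pool` class is hypothetical, and each tsd is modeled as a plain Python callable.

```python
import itertools

class Pool:
    """Round-robin over identical, stateless backends, skipping failures."""

    def __init__(self, backends):
        self.backends = backends
        self._cycle = itertools.cycle(range(len(backends)))

    def query(self, request):
        # Try each backend at most once per request.
        for _ in range(len(self.backends)):
            backend = self.backends[next(self._cycle)]
            try:
                return backend(request)
            except ConnectionError:
                continue  # any other instance can answer the same request
        raise ConnectionError("no tsd instance available")

def healthy(request):
    return "result for " + request

def down(request):
    raise ConnectionError("tsd unreachable")

pool = Pool([down, healthy])
print(pool.query("q1"))
```

The retry is safe only because a query carries everything needed to answer it; no instance holds session state that would be lost on failover.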

Data collection

Data collection requires boots on the ground. Some process somewhere needs to gather data from the monitored hosts and store it in HBase. OpenTSDB makes data collection linearly scalable by placing the burden of collection on the monitored hosts. Each machine runs local processes that collect measurements and each machine is responsible for seeing this data off to OpenTSDB. Adding a new host to your infrastructure places no additional workload on any individual component of OpenTSDB.

Network connections time out. Collection services crash. How does OpenTSDB guarantee observation delivery? Attaining high availability, it turns out, is equally mundane. The tcollector daemon, which also runs on each monitored host, takes care of these concerns by gathering measurements locally. It is responsible for ensuring observations are delivered to OpenTSDB by waiting out a network outage. It also manages collection scripts, running them on the appropriate interval and restarting them when they crash. As an added bonus, collection agents written for tcollector can be simple shell scripts. tcollector handles other matters as well; more information can be found at http://opentsdb.net/tcollector.html.
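The idea of waiting out a network outage can be sketched as a local queue that is drained only when delivery succeeds. This is not tcollector’s code; the `BufferedSender` class, the `flaky_send` function, and the observation strings are hypothetical.

```python
from collections import deque

class BufferedSender:
    """Keep observations queued locally until the send function accepts them."""

    def __init__(self, send):
        self.send = send
        self.pending = deque()

    def record(self, observation):
        self.pending.append(observation)

    def flush(self):
        """Deliver queued observations in order; stop at the first failure."""
        while self.pending:
            try:
                self.send(self.pending[0])
            except ConnectionError:
                return False  # keep everything queued; retry on the next flush
            self.pending.popleft()
        return True

attempts = []

def flaky_send(observation):
    """Simulated network: the first two attempts fail, later ones succeed."""
    attempts.append(observation)
    if len(attempts) <= 2:
        raise ConnectionError("network outage")

sender = BufferedSender(flaky_send)
sender.record("mysql.bytes_sent 1292148123 476")
sender.record("mysql.bytes_sent 1292148138 512")
print(sender.flush(), sender.flush(), sender.flush())
```

An observation is removed from the queue only after the send call returns, so a failure in the middle of a flush loses nothing.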

Collector agents don’t write to HBase directly. Doing so would require the tcollector installation to ship an HBase client library along with all its dependencies and configuration. It would also put an unnecessarily large load on HBase. Since the tsd is already deployed to support query load, it is also used for receiving data. The tsd process exposes a simple telnet-like protocol for receiving observations. It then handles interaction with HBase.
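A line-oriented protocol keeps the collector side very small. The sketch below formats one observation as a single text line; the `put` line layout follows the command described in OpenTSDB’s documentation, but treat it as an assumption here because this article doesn’t spell the format out. The `format_put` helper is hypothetical.

```python
def format_put(metric, timestamp, value, tags):
    """Format one observation as a single newline-terminated text line."""
    tag_text = " ".join(f"{name}={val}" for name, val in sorted(tags.items()))
    return f"put {metric} {timestamp} {value} {tag_text}\n"

line = format_put("mysql.bytes_sent", 1292148123, 476, {"host": "ubuntu"})
print(line, end="")
```

Because the message is plain text over a socket, a collector needs nothing beyond the ability to open a TCP connection, which is why simple shell scripts are enough.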

The tsd does very little work supporting writes so a small number of tsd instances can handle many times their number in tcollector agents. The OpenTSDB write path is illustrated in figure 5(c).

Figure 5(c) OpenTSDB write path. Collection scripts on monitored hosts report measurements to the local tcollector process. Measurements are then transmitted to a tsd process that handles writing observations to HBase.

You should now have a complete view of OpenTSDB. More importantly, you’ve seen how an application can take advantage of HBase’s strengths. Nothing here should be particularly surprising, especially if you’ve developed a highly available system before. It’s worth noting how much simpler such an application can be when the data storage system provides these features out of the box.

Summary

HBase is a flexible, scalable, accessible database. You’ve just seen some of that in action. A flexible data model allows HBase to store all sorts of data, and time series is just one example. Its simple client API opens HBase to clients in many languages. HBase is designed for scale, and now you’ve seen how to design an application to scale right along with it.
